Architecture design is responsible for dividing an overall system into manageable pieces that can be worked on independently. The subsequent build and integration process is responsible for putting the pieces together to form intermediate stages and finally the overall system. In addition, software systems of all types are increasingly being integrated with one another. Nevertheless, little attention is still paid to integration testing. We explain why architecting and integration testing should be closely connected activities and which best practices make integration testing effective in practice.

Software is everywhere. Smartphones, smart homes, or our automobiles are just some examples where software or apps play a major role. Nearly everywhere, software is the driver for innovation nowadays.

All kinds of systems are becoming more and more interconnected and integrated. While software was often developed individually and in isolation in the past, with a lot of effort spent on integration, there is now a strong trend towards greater integration of all types of systems. Some of the reasons for this trend are that

- embedded systems and information systems become more and more integrated;

- mobile devices play a major role regarding further apps to be integrated;

- more and more “things” are becoming integrated into the Internet of Things (IoT);

- software from multiple companies, often from different domains, is being integrated into new ecosystems.

It is surprising that despite this great attention to integration, little attention is still paid to integration testing. Today’s systems do not only integrate all types of systems, they also do so over time, throughout the entire development process, and not only once.

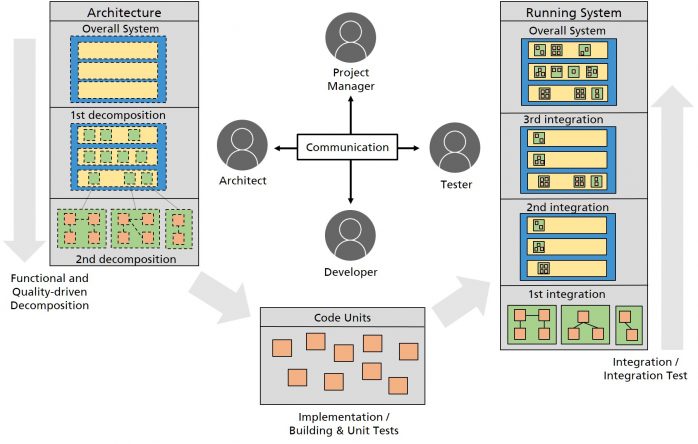

Architecture design is responsible for dividing an overall system into manageable pieces. For example, a system can be decomposed into three layers, each of which is then further refined. Each component can then be worked on largely independently by the development teams. The subsequent build and integration process is responsible for putting the pieces together to form intermediate stages and finally the overall system. Integration can start, for instance, with the composition of various components, followed by the integration of components into a cluster in order to check a general functionality. Next, the layers can be completed until the entire system is fully integrated. Thus, an integrated view of architecture and integration testing is a natural fit, which is the topic of this article.

During the integration process, quality has to be checked, especially with regard to interfaces, communication, and collaboration between components or system parts. This is not a trivial task, as the complexity of software systems grows fast, particularly as a result of stronger integration into whole ecosystems. The intervals between release cycles decrease while the quality requirements increase. In addition, resources in terms of budget and/or available architects, developers, or testers are often limited.

The literature about testing often only scratches the surface of integration testing and describes, for instance, different integration strategies (e.g., top-down integration) or discusses specialties such as object-oriented characteristics and their influence on integration testing [1-4]. Dedicated books on integration testing are rare [5] and often leave the reader not knowing where to start. There is also some research into how integration testing can be connected more closely with the architecture [6]; however, the results are not really mature yet. All of this means that integration testing is recognized both by practitioners and researchers as a fundamental pillar of quality assurance, but there is still limited support for its realization.

It’s all about integration – but integration testing is often neglected!

New development paradigms, such as DevOps, pursue goals such as higher quality, higher release frequency, and faster releases. One key aspect in this context is to reduce “silo thinking” among different people throughout the development cycle. We continue this principle by motivating a stronger connection between the architecture and integration testing. We will show how to do architecture-centric integration testing so that integration can be mastered.

Why is there often no helpful Integration Test?

The value of an integration test is often still not recognized today. When integration testing is neglected, the consequences are often slipped defects, inefficient quality assurance, poor communication, or unmotivated testers, and ultimately a software product that does not have the required quality or that consumes more resources than planned. This leads to dissatisfied customers on the one hand and to much higher software development costs on the other.

- What happens when no integration testing is performed?

When integration testing is omitted, integration defects can only be found in later testing activities such as during system or acceptance testing. However, as the internal structure of the system is not considered during such testing activities and as interfaces and communication paths are not known on this level, it is very difficult to detect such problems. The likelihood of overlooking such problems is thus very high.

Software systems are becoming more and more complex. Both individual software and more closely integrated system landscapes and ecosystems grow (e.g., in lines of code) and often provide many different services. A piece of software might offer several implemented workflows, have access to different databases, and offer its service over different channels. If such software is also available on mobile devices, communication between the mobile device and a backend has to be checked. If thousands of users are able to access the backend at the same time, performance issues have to be considered early on. All these challenges can already be considered during integration testing.

Without dedicated integration testing, complexity cannot be handled in a satisfactory manner. Typical defects that can be found in an integration test will go unnoticed.

- Why is there frequently no dedicated integration testing?

Often small system parts are checked during a module test and then “thrown together”, and a system test follows. Reasons for omitting the integration test may be that the software system is assumed to be rather small and that the quality seems to be under control. It is assumed that integration problems will somehow be found during a later system test, which often does not happen. As resources for testing are limited, no time is spent on dedicated integration testing, as more popular unit and system testing often also consume much effort. Especially when deadlines are tight, integration testing seems to be the first thing to be neglected. Furthermore, nobody might feel responsible for integration testing, as developers take care of their components and testers often check the entire system. A lack of knowledge of either the system or testing, or both, may also lead to no integration testing being done at all, or to it being done inefficiently.

- How can the tester influence the integration test and the architecture?

The integration tester usually has specific testability requirements in order to be able to perform effective and efficient integration testing. Moreover, a tester often knows about typical functional and non-functional problems that might occur, so that he/she can give early feedback on what might be missing in the design, or what is ambiguous.

However, testers are often not invited to early requirements or architecture meetings where such aspects are discussed and defined. Consequently, testability requirements are often not considered, and when the tester starts defining a test strategy and setting up an environment for tests, testability is not considered with the necessary rigor. Integration tests then become inefficient, and some tests might not even be possible. The frustration for integration testers grows, and the motivation to perform a rigorous integration test decreases.

- What is the basis for the integration test?

One main source for a test basis of the integration test is an understanding of the architecture. Such an understanding (as well as documentation) is often missing among testers, or is of low quality. A suitable strategy for overcoming this problem is to directly communicate with the responsible architects. Unfortunately, they are often not available, are available too late, or have a silo mentality that hampers collaboration.

- How to avoid wasting effort during the integration test?

In order to perform an efficient integration test, the automation potential has to be determined and automation concepts need to be implemented. A recent software test survey (see http://softwaretest-umfrage.de/) has shown that there is surprisingly little consideration for test automation during integration testing. This means that high optimization potential exists for the integration test, for example with regard to a mentality of more continuous delivery to ensure high quality.

It is about an overall quality assurance strategy that explicitly considers integration testing.

One major step towards improved integration testing is a stronger connection with the architecture and greater consideration of integration testing as part of an overall quality assurance strategy. We call this architecture-centric integration testing. Several best practices can help to move in this direction. These best practices cover who is involved how, and which benefits may result.

Best Practices for Architecture-centric Integration Testing

Best Practice 1 – Explicit definition of the integration test: Our first recommendation is to explicitly integrate integration testing into a quality assurance strategy. The goals and the focus have to be defined, resources have to be allocated together with a project manager, and testability must be discussed with the architects early on.

Best Practice 2 – Improving the architecture and sharing knowledge: A stronger connection between integration testing and architecture has to be practiced, not just declared. This is fostered by dedicated communication between the architect and the tester responsible for the integration test. Of course, the integration tester has to think about how to do the integration test and in which order the system parts should be integrated. An early review of the architecture by the tester, who will later use it as a test basis, ensures high quality of the architecture early on. Furthermore, the tester thus acquires knowledge about the architecture early on, which he/she can then use to define an improved integration testing strategy, including the order of the components and system parts for the integration test. Besides communication and interface aspects, which are the focus of an integration test, non-functional properties such as performance or security could also be checked early during integration testing.

Best Practice 3 – Ensuring testability early on: Testability requirements should be discussed very early between the tester and the architect (see also [7]). Incorporating testability later can be very expensive. The adequate trade-off between testability and further properties and requirements has to be discussed. Different test steps can be supported early on through reasonable conceptual decisions regarding the creation of the starting situation for the test, by ensuring preconditions, bringing test data into the system, or checking test results and post-conditions. Therefore, a system needs appropriate interfaces. We can distinguish between interfaces for stimulating the system and interfaces for observing something. One concrete example is a graphical user interface. During integration testing on a higher level, graphical objects have to be stimulated or manipulated. To do this, a test tool has to be able to identify such objects, ideally independent of their position or size. Therefore, a unique numbering scheme for such objects has to be defined early to ensure testability. Another example is databases that provide test data or in which results are checked, and which have to follow a pre-defined and unique database schema. Such test databases must be able to connect to the system under test. Thus, a clear definition of such interfaces must be considered.
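To make the distinction between stimulation and observation interfaces more tangible, here is a minimal Python sketch. It is not tied to any real GUI toolkit or test tool: `Widget`, `NewsView`, `UiProbe`, and identifiers such as `news.refresh_button` are hypothetical names chosen for illustration, and stable string identifiers stand in for the unique numbering scheme mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class Widget:
    """A GUI object addressed by a stable ID, not by position or size."""
    widget_id: str
    text: str = ""
    clicks: int = 0


@dataclass
class NewsView:
    """Hypothetical view that registers every widget under a unique ID."""
    widgets: dict[str, Widget] = field(default_factory=dict)

    def add(self, widget: Widget) -> None:
        # Rejecting duplicates keeps the identification scheme unique.
        if widget.widget_id in self.widgets:
            raise ValueError(f"duplicate widget id: {widget.widget_id}")
        self.widgets[widget.widget_id] = widget


class UiProbe:
    """Test-side access: one method to stimulate, one to observe."""

    def __init__(self, view: NewsView) -> None:
        self._view = view

    def click(self, widget_id: str) -> None:
        # Stimulation interface: trigger behaviour via the widget's ID.
        self._view.widgets[widget_id].clicks += 1

    def read_text(self, widget_id: str) -> str:
        # Observation interface: read state via the widget's ID.
        return self._view.widgets[widget_id].text
```

Because the probe only depends on identifiers, a layout change that moves or resizes a widget would not break a test written against it. That is the kind of property worth agreeing on with the architect early.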

Best Practice 4 – Early planning of integration and integration testing: It is often unclear in which order components should be integrated and what should be tested during an integration test. An integration graph can support an integration tester in creating an explicit concept of the integration test at an early point in time. We assume that there is usually no big-bang integration, but rather a well-defined, step-by-step integration.

Such an integration graph contains all the software modules of the current system and also such modules that are known to be developed in the future. A definition of the order of how the different parts are integrated can be sketched. Furthermore, it can be discussed explicitly which tests are necessary at which integration step and what the prerequisites are for creating the technical environment. This discussion should again take place between the tester and the architect. The architect has detailed knowledge about the system and the technologies, while the tester can contribute knowledge on how to perform testing activities and can assess how much testing is enough. Such an integration graph can be visualized directly on architecture models or, alternatively, a dedicated graph can be derived by the tester, as shown in the following figure.

In the figure, three backend and three frontend components are shown that offer a news functionality. Of course, different possibilities exist for integrating these components. In the given example, the news controller and the news view are integrated first in the frontend (step F1) and are then integrated with a network transfer component in step F2. At the backend, a news repository is integrated with a database in step B1, which is then integrated with a news service (step B2). Both subcomponents are then integrated in step F3-B3. Some test information is also sketched, showing who should do the test, how risky the integration is rated and therefore how important the integration test is, and whether it is a new test or a regression test. In addition, the integration step F1 is marked with a red circle, meaning that this integration leads to a new integrated component, which will be considered as “one part” in future steps. At the backend, the components “news service” and “news repository” will also count as one integrated component in the future. As the integration order at the backend was different, however, meaning these two components were not integrated directly, a “virtual” component B2* is shown there for further modeling steps.

In conclusion, such a representation helps to make the integration explicit, provides a basis for discussing different possibilities, and helps to understand how the integration is done. The annotations serve as hints as to what is important to consider in such an integration test.
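Such an integration graph can also be captured in a few lines of code, for instance to derive a valid integration order automatically. The following Python sketch encodes the steps F1, F2, B1, B2, and F3-B3 from the news example. The `IntegrationStep` structure and the risk and test-kind values are assumptions made for illustration; they are not taken from the figure. The ordering uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class IntegrationStep:
    name: str
    parts: tuple[str, ...]  # components or earlier steps that are combined
    risk: str               # placeholder annotation, e.g. "low" or "high"
    test_kind: str          # "new" or "regression"


# Steps from the news example; risk and test_kind values are invented.
STEPS = [
    IntegrationStep("F1", ("news controller", "news view"), "low", "new"),
    IntegrationStep("F2", ("F1", "network transfer"), "medium", "new"),
    IntegrationStep("B1", ("news repository", "database"), "medium", "new"),
    IntegrationStep("B2", ("B1", "news service"), "low", "new"),
    IntegrationStep("F3-B3", ("F2", "B2"), "high", "new"),
]


def integration_order(steps: list[IntegrationStep]) -> list[str]:
    """Return step names so that each step follows the steps it builds on."""
    names = {step.name for step in steps}
    # Only earlier steps count as predecessors; plain components do not.
    graph = {
        step.name: {part for part in step.parts if part in names}
        for step in steps
    }
    return list(TopologicalSorter(graph).static_order())
```

Calling `integration_order(STEPS)` yields one admissible sequence in which F1 precedes F2, B1 precedes B2, and F3-B3 comes last. Frontend and backend steps are independent of each other until the final step, which reflects that the two sides could be integrated in parallel by different teams.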

Best Practice 5 – Identifying typical integration problems with integration patterns: The integration graph supports making integration strategies explicit early on and indicates how to perform the integration test. Integration patterns complement it by covering concrete solutions for different challenges and problems encountered during integration and integration testing, so that defects are avoided or found earlier.

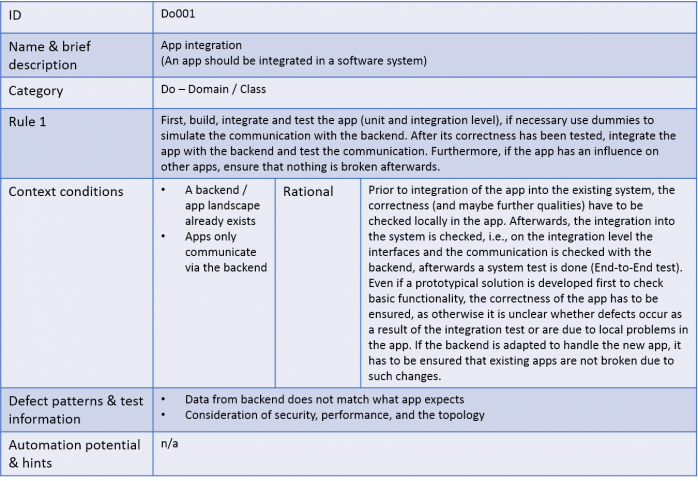

For example, imagine you want to build a new app and integrate it into an existing app suite. A simple rule can be to start by checking the correctness of the app itself, continue by checking the communication using dummies if needed, and include the concrete backend afterwards to perform an end-to-end test. Such a rule presents an initial procedure regarding what has to be considered. Of course, rules might be refined further by a tester in order to be operational with respect to certain technologies. Relevant context conditions describing under which circumstances the rule is applicable can be added, such as communication via a web interface. A rationale for the above rule explains why the steps should be performed in the described order. Such a rule is accompanied by concrete defect patterns and test information to be considered, e.g., consideration of further qualities such as security or performance, or checking whether data from the backend matches what is expected by the app. Together with some classification and identification information, the full example can be seen in the following figure.

Several such integration patterns have been collected by Fraunhofer IESE, for example login mechanisms or integration of different apps into a new or existing software system landscape. Further context-specific patterns might be created to support a concrete environment. Knowledge is encapsulated by experts and can be used during the development of software and help in the early consideration of potential problems to optimize the definition of the architecture or to design more adequate integration tests. With our integration patterns, several obstacles are presented explicitly so that early response is possible.
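The rule described above for integrating a new app (check the app itself, then check communication using dummies, then include the concrete backend) can be illustrated for its second step with a short Python sketch. All names here (`NewsBackend`, `DummyBackend`, `FailingBackend`, `NewsApp`) are hypothetical and do not come from the pattern collection; the sketch only assumes that the app talks to its backend through a narrow interface.

```python
from typing import Protocol


class NewsBackend(Protocol):
    """The interface the app expects from any backend, real or dummy."""

    def fetch_headlines(self) -> list[str]: ...


class DummyBackend:
    """Stand-in that returns canned data instead of calling a server."""

    def fetch_headlines(self) -> list[str]:
        return ["Headline A", "Headline B"]


class FailingBackend:
    """Stand-in that simulates an unreachable backend."""

    def fetch_headlines(self) -> list[str]:
        raise ConnectionError("backend unreachable")


class NewsApp:
    def __init__(self, backend: NewsBackend) -> None:
        self._backend = backend

    def render(self) -> str:
        # The app must cope with communication failures, not only with data.
        try:
            headlines = self._backend.fetch_headlines()
        except ConnectionError:
            return "News unavailable"
        return "\n".join(f"- {headline}" for headline in headlines)
```

With the dummies in place, both the normal path and a typical defect pattern (the backend cannot be reached) can be exercised before the concrete backend is available. The end-to-end test in the third step would then pass a real backend client with the same interface to `NewsApp`.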

Best Practice 6 – Improving architecture documentation: With stronger involvement of the testers and more feedback given by them, the architecture documentation can be improved. The tester's need for suitable architecture documentation forces the architect (in a positive sense) to document the aspects that the tester relies on.

Best Practice 7 – Striving for higher automation: Release cycles are getting ever shorter due to business reasons. New features are released faster. Throughout all this, the quality should remain high, of course. Automation during quality assurance is an essential way to support these requirements, and strong automation of integration tests is a key aspect. New paradigms such as DevOps, where continuous integration and delivery play a crucial role, force testers to provide a high degree of automation. Achieving high deployment frequency typically affects the architecture because teams have to develop and release their software independently (e.g., in Microservice architectures). With every commit, regression tests have to be performed. Automated regression tests for integration testing are thus a must to prevent defects going into production.
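As a small illustration of an automated integration regression test that could run with every commit, the following Python sketch exercises a repository together with a database, in the spirit of step B1 of the news example. The `NewsRepository` class and its table layout are assumptions made for this sketch, and an in-memory SQLite database stands in for whatever database a real system would use. Only the standard library modules `sqlite3` and `unittest` are used.

```python
import sqlite3
import unittest


class NewsRepository:
    """Hypothetical repository that stores news titles in a SQL database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS news "
            "(id INTEGER PRIMARY KEY, title TEXT NOT NULL)"
        )

    def add(self, title: str) -> int:
        cur = self._conn.execute(
            "INSERT INTO news (title) VALUES (?)", (title,)
        )
        self._conn.commit()
        return cur.lastrowid

    def titles(self) -> list[str]:
        rows = self._conn.execute("SELECT title FROM news ORDER BY id")
        return [row[0] for row in rows]


class RepositoryDatabaseIntegrationTest(unittest.TestCase):
    def setUp(self) -> None:
        # A fresh in-memory database per test keeps the tests independent.
        self.conn = sqlite3.connect(":memory:")
        self.repo = NewsRepository(self.conn)

    def tearDown(self) -> None:
        self.conn.close()

    def test_round_trip(self) -> None:
        # Data written through the repository can be read back in order.
        self.repo.add("First")
        self.repo.add("Second")
        self.assertEqual(self.repo.titles(), ["First", "Second"])

    def test_database_rejects_missing_title(self) -> None:
        # A constraint violation in the database surfaces as an error.
        with self.assertRaises(sqlite3.IntegrityError):
            self.repo.add(None)
```

Saved in a test module, these tests can be run with `python -m unittest`, which makes them easy to hook into a continuous integration pipeline. The second test deliberately targets the interface between the two parts: it checks how a database-level failure becomes visible to the caller, not just the happy path.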

How to improve integration testing

The improvement of integration testing should begin with an analysis of the current situation: figuring out how well it is being done right now and what the improvement potential is. Aspects to consider are:

- The different roles that exist along the software development process and the concrete communication paths help to understand the current quality and the potential for stronger integration of architecture and testing. This can start with a small agile team, but huge departments or several teams could also be involved. For each situation, different challenges might be identified where solutions have to be derived to optimize communication.

- Another aspect is the current alignment of architecture and integration testing during software development. Do architects create documentation that can be used by the tester to derive test cases for the integration test? Does the tester give feedback regarding the architecture? Is the architecture designed in such a way that testability is ensured? These and further questions can be answered by analyzing the concrete environment regarding the integration of architecture and integration testing.

- When a company develops several products or when an ecosystem consisting of several companies exists, an analysis of the degree of integration of the different software parts can lead to insights about testing, maintainability, or updates.

- Trends such as becoming more agile, introducing DevOps practices, or implementing a highly automated continuous integration, deployment, and delivery pipeline provide new challenges for integration testing. One main issue is the high degree of automation of the integration test. Therefore, an analysis of how well the integration test is automated, where new potential for greater automation lies, and whether one has automated the right set of tests can help to identify areas of improvement for the integration test.

Integration testing only gets better with concrete improvement steps.

- This starts by embedding integration testing into a holistic quality assurance strategy.

- Clearly defining who is doing what in which testing step helps to make integration testing as well as the entire quality assurance more efficient.

- Quality gates and KPIs can ensure that the quality can be controlled better.

- A review of the architecture should be done.

- With support from a simple integration graph, the integration and its test strategy are made more concrete early in the development process.

- The order for the integration of the software parts is clearly defined and can thereby be better controlled and further adapted when needed.

- The respective focus during different integration tests can be annotated, which results in higher quality of the product.

- Typical integration patterns can be considered early.

- Depending on the concrete environment, predefined integration patterns can be used directly once the architecture has been defined and the integration test has been planned.

- Defect patterns captured in such an integration pattern can be used during the integration test to identify typical integration defects.

- New integration patterns can be created in addition to cover further context-specific problems and to consider context-specific goals.

References

[1] I. Burnstein, Practical Software Testing, Springer, 2003

[2] P. Liggesmeyer, Software Qualität, Spektrum Akademischer Verlag, 2009

[3] A. Spillner, T. Linz, Basiswissen Softwaretest, dPunkt Verlag, 2012

[4] E. van Veenendaal, D. Graham, R. Black, Foundations of Software Testing ISTQB Certification, Cengage Learning Emea, 2013

[5] M. Winter, M. Ekssir-Monfared, H. Sneed, R. Seidl, L. Borner, Der Integrationstest, Carl Hanser Verlag, 2013

[6] P. Clements, SEI Blog, https://insights.sei.cmu.edu/sei_blog/2011/08/improving-testing-outcomes-through-software-architecture.html, 2011

[7] C. Brandes, S. Okujava, J. Baier, Architektur und Testbarkeit: Eine Checkliste (nicht nur) für Softwarearchitekten – Teil 1 und Teil 2, OBJEKTspektrum Ausgaben 01/2016 und 02/2016

Michail Anastasopoulos says:

Hello guys,

thanks for this very nice article.

Your hypothesis is that integration testing is often neglected. And your solution approach is definitely interesting.

However, I have had the opposite experience: Integration testing is often preferred over module testing, because the latter is considered too complicated or simply useless. I fully agree that this might be a very sad truth, but there can be practical reasons for this.

Consider a rather simple but very common example: You have an order component whose job is to save orders in the database. You will often find people testing such a component directly on the integration level and not on the module level. That means they write tests that need a running database, along with test data, in order to execute. The test then asserts, for example, that orders are saved successfully in the database. Clearly an integration test. A module test would of course test the component in isolation, using techniques like mocking to deal with the dependency on the database.

Isolating components from their dependencies in order to run module tests is often considered too high a burden. Hence, developers often neglect them and move on directly to the integration tests. The latter are of course harder to maintain, and this is where your approach can be particularly valuable. Apart from that, integration tests also take much more time to execute, which in turn often leads developers to write only a few of them…

As you already mentioned, testability is the key here. Writing module tests requires testability, which is very often missing from current systems.

Looking forward to your opinion.

Best regards,

Michail Anastasopoulos

Dr. Matthias Naab says:

Hello Michail,

thank you very much for your comment and the valuable viewpoints.

First of all, we fully agree that the example you mentioned is very common in practice.

However, what is missing in the example of the component with database access is the following: There is no deliberate integration of the component(s) containing the business logic and the respective technical component, namely the database. Rather, the integration happens arbitrarily because it is in that case the easier way out, exactly as you mentioned.

Coming back to the idea of integration test, something essential is missing: Intentionally testing the integration of different components using the (architectural) knowledge about their communication and mutual assumptions. In the example, this would include testing failure cases related to the technical properties of the database and how the components containing the business logic react to that.

We would like to use another special case to support our argumentation: The system test of the overall system is clearly an integration test, as well. It integrates all single components of a system and it is also widely applied. However, it is done to check the overall behavior of the system. Again, this neglects the intention (and often the possibility) to use the knowledge about the component interplay to identify problems that otherwise are not found.

We are looking forward to your opinion about these thoughts.

Best regards,

Matthias

Michail Anastasopoulos says:

Hi Matthias,

yes, you are absolutely right. Integration testing should be used intentionally and its only purpose should be to test integration. This is indeed neglected in practice. Developers integrate just in order to be able to test. But the tests possibly do not focus on the integration aspects such as those you mentioned. Sounds like a paradox, doesn’t it?

And indeed, system testing is supposed to test the system as a whole, but the employed tests might not test, for example, how integration failures among components propagate across the system.

Therefore your approach can be very helpful here. Keep up the good work!

Best regards,

Michail

julia says:

Hello,

Thanks for writing this detailed article dedicated to Integration test, a lot of interesting points here! There is one topic though, which is not discussed a lot and that’s the KPIs part.

So once we’ve come to understand the importance of the Integration tests, and how to implement them, now, how do we measure them, their effectiveness, their completeness? This brings me to test coverage metric, which is somewhat controversial, as many believe test coverage when it comes to Integration tests is not achievable/meaningful. What is your view on this and what could be measured about Integration tests to attest that they do exist and that they do or don’t just yet cover important functional connections? Obviously defects, but do we have other powerful KPIs here?

Just as a note, I totally support the assertion that test coverage alone does not guarantee that a feature is bug-free, just like in the context of unit tests. However, coverage is a good metric when you start from zero, to analyze a positive trend: if your set of tests grows and covers the different areas of your units, components, or integrated parts, then little by little quality assurance grows too, even though it does not end with 100% coverage.

Best regards,

Julia Denysenko

Dr. Frank Elberzhager says:

Dear Julia,

thank you very much for your comment!

I agree with you that coverage is meaningful, but it is more of an indication, as you already pointed out, and its meaningfulness depends on the concrete coverage metric. On the unit level, statement coverage is a rather weak coverage criterion, while branch coverage is already stronger. The same is true for integration tests, and the concrete coverage criterion depends to a certain extent on the concrete interface. For example, you can just test whether data is sent correctly via an interface, but you can also use different kinds of data (similar to equivalence partitioning) to check the interface in more depth. You can also consider an interface with respect to performance and increase the load on it. For all these different cases, the architecture is a strong and meaningful source, and of course, you can also use the architecture to define the level of coverage you want to achieve.

These examples bring me to another point: It is not only about KPIs, but also about the integration strategy (which is part of a test or quality assurance strategy), and that is where one should invest some effort: Think clearly about the depth in which the interfaces should be checked, and think about the order of integration. Adherence to such a strategy can then also be monitored (which makes it a kind of process KPI), ensuring that the integration test is done in a structured way. If such a process KPI is complemented with coverage numbers and defect numbers, I agree that the level of quality can be monitored in a reasonable way. By the way, it is obvious that 100% coverage is not necessarily the ultimate goal and might often not be achievable except with tremendous effort; moreover, 100% coverage does not mean free of defects. So a decision must be made (within the quality assurance strategy) as to which level of coverage should be achieved and, as already pointed out, how concrete coverage numbers can be interpreted.

preetiagarwal says:

I read the whole blog post now, and although the title sounds provocative,

Thanks a lot for sharing the ideas

Dr. Frank Elberzhager says:

Dear preetiagarwal,

thank you for reading our blog post, and thank you for your comment. If you would like to discuss any aspect in more detail, feel free to submit another comment.