Enhancing datasets by adding input variations or data quality deficits is often done using data augmentation approaches. These approaches employ classical image processing techniques, Deep Learning models like Convolutional Neural Networks (CNNs), or Generative Adversarial Networks (GANs). With the rise of Generative AI (GenAI) and its impressive image generation abilities, GenAI appears promising for data augmentation. We used ChatGPT 4o to augment images of traffic signs and compared the results to an augmentation framework [1][2] we developed a few years back based on classical image processing.

Autorin/Autor des ursprünglichen Artikels sind: Lisa Jöckel und Daniel Seifert

What is data augmentation?

Data augmentation creates new data points from existing data. It is used to enhance a dataset without collecting new data. When data augmentation is applied, the original data is changed or transformed such that we still know the annotated label (i.e., the ground truth). For example, if you rotate an image of a butterfly, it still shows a butterfly. This has the benefit that the additional data points do not have to be labeled manually.

Data augmentation is often used on image data with transformations such as rotating, flipping, or cropping the image, or adding noise to the pixel values. This way, training a Machine Learning (ML) model on an augmented dataset can increase the robustness of the model to these kinds of input variations during the later usage of the model.

Another application consists of augmenting the test dataset. Usually, it is best to have real data points for testing. However, some situations do not occur frequently, so they are difficult to gather during data collection but are still important during model testing. For example, it does not snow very often in Germany, but you would still want to test whether your pedestrian detection model goes off the rails if the input images have a lot of white areas. Thinking about which factors might influence the image appearance and hence possibly the model outcome is a useful approach when it comes to selecting the kinds of data augmentations to be considered [1].

How can images be augmented with input variations?

Many image augmentations can be achieved with classical image processing approaches. Affine transformations like rotation, flipping, or scaling use matrix multiplication and vector addition. Noise is commonly added pixelwise, and hue or brightness can be adjusted through operations in the color space. Images are usually interpreted in the RGB color space, meaning each pixel has three values (sometimes four, with an additional opacity value) for red, green, and blue, with values between 0 and 255. If all values are zero, we get black. If all values are 255, we get white. However, the RGB values can be converted to other color spaces like HSV (hue, saturation, value) or HSL (hue, saturation, lightness), where hue or brightness can be adjusted more easily.

Variations based on image processing can also be combined in compositions representing more complex variations (see more in [2]). For example, rain might be implemented by adding lines to the image, or dirt on the camera lens by adding a semi-transparent brown and noisy layer.

Another option is neural style transfer [3], which uses Deep Learning approaches (i.e., convolutional neural networks or generative adversarial networks) to apply a certain style to a given image. For example, this allows transforming an image taken in a summer setting into one in a winter setting (e.g., [4]).

Furthermore, Generative AI (GenAI) like OpenAI’s GPT-4o and Dall-E opens further possibilities to augment and enhance image datasets.

What are typical pitfalls in image augmentation?

Degree of realism: Data augmentation takes a dataset of real images and transforms them artificially. Using a real dataset keeps the intrinsic realistic variations, which is better than fully artificial data (e.g., from simulations). However, depending on the photorealistic qualities of the artificial transformations, the result might still seem unrealistic. For example, to augment multiple images with rain, adding the same vertical lines with the same length and direction to all images might lead to the ML model learning the line pattern. In real images, there are variations in drop length, or the wind direction and velocity will influence the direction of the drops.

Dependencies between multiple input variations: There might be multiple input variations that need to be applied together (e.g., if it is raining at night, we would want to add a rain augmentation to the image and, additionally, darkness). Here, two things need to be considered: The first is the order in which the augmentations are applied. For example, when first adding an augmentation for dirt on the camera lens and then adding lines as raindrops for rain augmentation, we accidentally put the lines in front of the dirt despite the dirt being nearest to the viewer (i.e., the camera) in the line of sight. The second thing to consider are dependencies between the augmentations. For example, traffic signs reflect light at night when hit by a car’s headlights. Hence, the image area of the traffic sign is brightened while the background is darkened. If, however, there is dirt on the traffic sign, it does not reflect as strongly in the dirty areas, which means that we should not increase the image brightness as much for the affected pixels.

Predictability of results: Using classical image processing techniques has the benefit that the developer has full control over the appearance of each input variation. When using Deep Learning approaches or GenAI, there is less effort in designing and developing the variations, but as they are data-driven approaches, we cannot fully control whether or not we will get the expected outcome.

Replicating the augmentations with GenAI

A few years ago, we developed an augmentation framework for images using the use case of traffic sign recognition [1][2]. Traffic sign recognition aims to classify the type of traffic sign on images typically created from the bounding boxes around the signs. The bounding boxes might be determined in a previous step by a traffic sign detection model. Therefore, the images are rather small, i.e., in the German traffic sign recognition benchmark (GTSRB) that we used, images vary in size but are mostly smaller than 100×100 pixels. The augmentation framework uses classical image processing, aims to achieve a high degree of realism, and considers dependencies when multiple input variations are to be applied to the same image. Developing such a framework for a specific use case is rather time-consuming. As we were curious whether GenAI can do the augmentations itself, or at least assist in getting better results, we experimented by trying to replicate some of the variations from our augmentation framework.

Below are some basic input variations that do not combine multiple variations. The original image was given to ChatGPT 4o (which also has access to Dall-E 3) and prompted with “Add [input variation] to the original image” or “Adjust the original image as if it were taken [describe input variation]”:

Rain and haze look okay so far. For darkness and backlight, the brightness adjustments were done to the whole image without distinguishing between the sign and the background. At night, when a car shines its headlights onto a traffic sign, the sign becomes significantly brighter while the background remains dark. If there is backlight, the sign should not be brighter but darker to balance the brightness of the backlight. Motion blur is just a general blurriness instead of a directed stepwise blur. Neither dirt or steam on the lens, nor dirt on the sign seem to have any effect on the image.

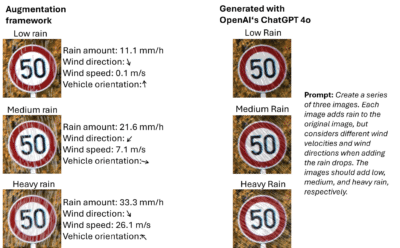

Regarding realism, the interplay between wind velocity, wind direction, and driving direction is considered in our augmentation framework when augmenting rain. We prompted ChatGPT-4o with “Create a series of three images. Each image adds rain to the original image, but considers different wind velocities and wind directions when adding the raindrops. The images should add low, medium, and heavy rain, respectively.” The result is depicted below:

Getting different intensities of rain worked quite well. Even the task of varying the directions of the raindrops due to wind was understood by the model.

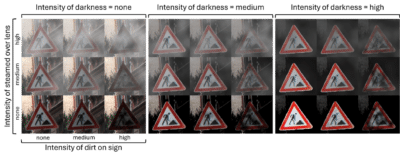

A key asset of our augmentation framework is the consideration of dependencies between multiple input variations. For example, dirt on a traffic sign does not reflect as much light as the sign itself, or a steamed-over lens affects the image by blurring and brightening but when it is dark outside, we have less brightening. We illustrated this in [1] with the following image matrix combining three input variations (i.e., darkness, dirt on the sign, and steam on the camera lens) in increasing intensities:

With ChatGPT 4o, we tried to replicate the combination of these three input variations, resulting in:

Although we provided explanations in the prompt, the result was not what we expected. It seems to have applied a triangular mask to separate the sign and the background but with the wrong rotation. The background does not darken the original image but is replaced by a gray gradient.

We also tried to use GenAI for input variations that are more complex to develop properly with classical image processing:

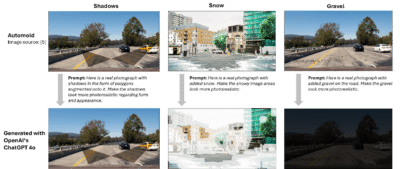

As it takes time to implement realistic augmentations with image processing techniques, it might be interesting to produce simplified results with controllable techniques and then ask GenAI to make it look more realistic. In the Automold library [5], there are simplified augmentations for shadows as darkened regions in random polygonal shapes, and snow is simulated by brightening image areas. We asked ChatGPT 4o to make them more realistic, but did not get the results we had hoped for:

Further articles on the topic of Generative AI and Large Language Models:

- Retrieval Augmented Generation: Chatten mit den eigenen Daten

- Prompt Engineering: Wie kommuniziert man am besten mit großen Sprachmodellen?

- Die Zukunft des Sprachassistenten: Datenhoheit durch Spracherkennung mit eigenem LLM Voice Bot

- Large Action Models (LAMs) nutzen neurosymbolische KI – Die nächste Stufe im Hype rund um Generative AI

Using Generative AI instead of image augmentation

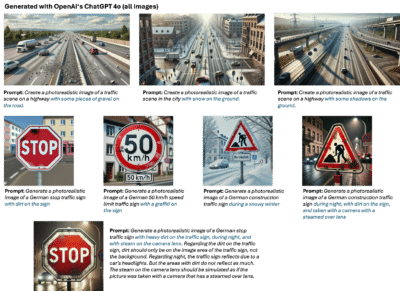

Asking GenAI models to directly generate a certain scene or traffic sign with the desired input variations might also be an option. However, this leaves very much room for interpretation to the model, and it might be a lot of effort to craft the prompt. Furthermore, the likelihood increases that some aspects of the generated content will not properly reflect reality:

However, some results are quite good, but are partially still distinguishable from real photographs due to their general appearance or generation artifacts:

We did not examine other GenAI tools like Midjourney or Adobe Firefly, which might also be interesting to do in the future.

In the Data Science team, we focus on topics concerning the quality of AI-based solutions.

Our solution offerings as PDFs:

References:

[1] Jöckel, L., Kläs, M., „Increasing Trust in Data-Driven Model Validation – A Framework for Probabilistic Augmentation of Images and Meta-Data Generation using Application Scope Characteristics,“ 38th International Conference on Computer Safety, Reliability and Security (SAFECOMP), 2019, doi: 10.1007/978-3-030-26601-1_11.

[2] Jöckel, L., Kläs, M., Martínez-Fernández, S., „Safe Traffic Sign Recognition through Data Augmentation for Autonomous Vehicles Software,“ 19th IEEE International Conference on Software Quality, Reliability and Security Companion (QRS-C), 2019, doi: 10.1109/QRS-C.2019.00114.

[3] Gatys, L. A., Ecker, A. S., Bethge, M., „Image Style Transfer Using Convolutional Neural Networks,“ 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, doi: 10.1109/CVPR.2016.265.

[4] Zhang, F., Wang, C., „MSGAN: Generative Adversarial Networks for Image Seasonal Style Transfer,“ in IEEE Access, vol. 8, pp. 104830-104840, 2020, doi: 10.1109/ACCESS.2020.2999750.

[5] Automold library, https://github.com/UjjwalSaxena/Automold–Road-Augmentation-Library, accessed 24.07.2024.